Batches: 100%|██████████| 191/191 [00:20<00:00, 9.51it/s]

2023-05-13 08:09:55,134 - BERTopic - Transformed documents to Embeddings

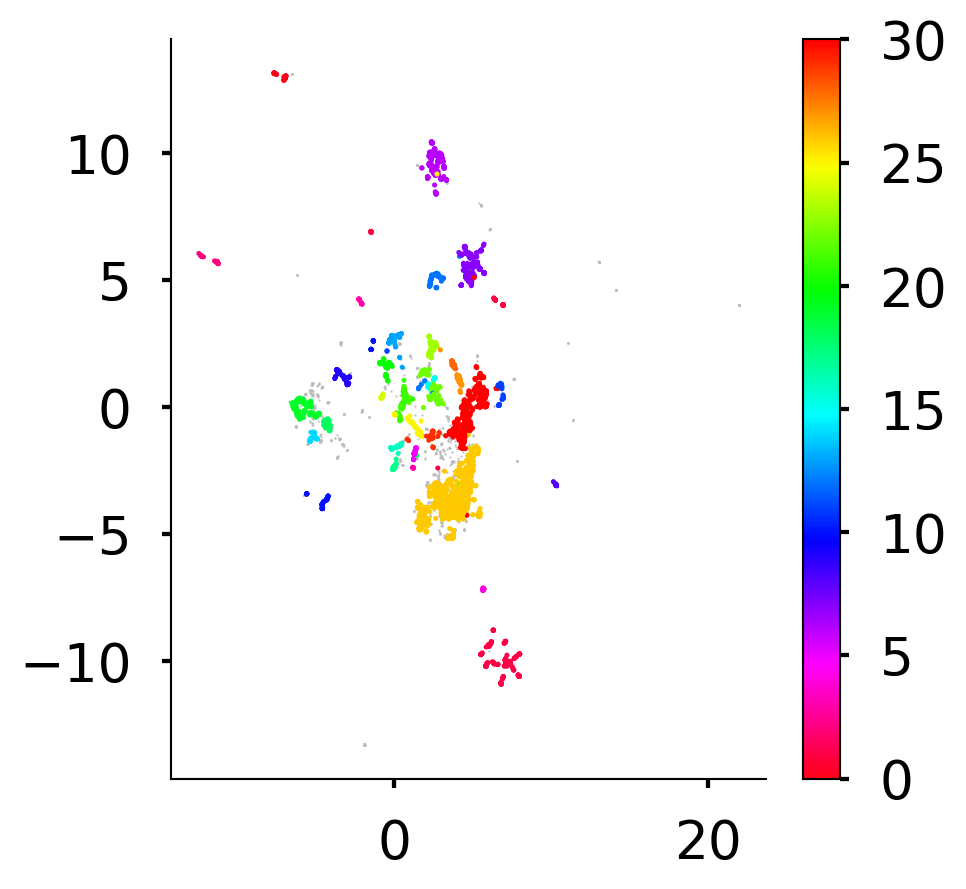

2023-05-13 08:09:57,705 - BERTopic - Reduced dimensionality

2023-05-13 08:09:58,621 - BERTopic - Clustered reduced embeddings

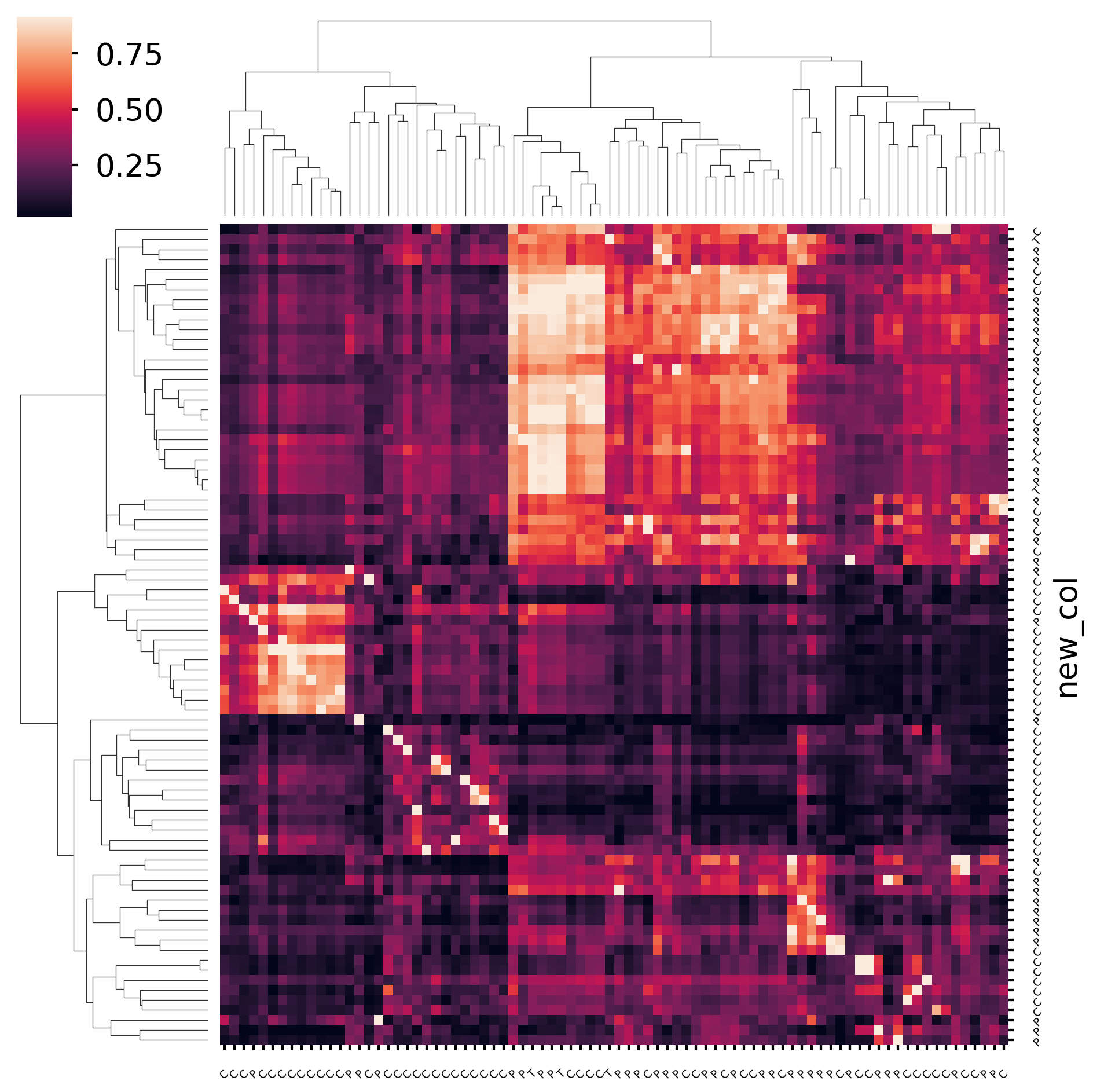

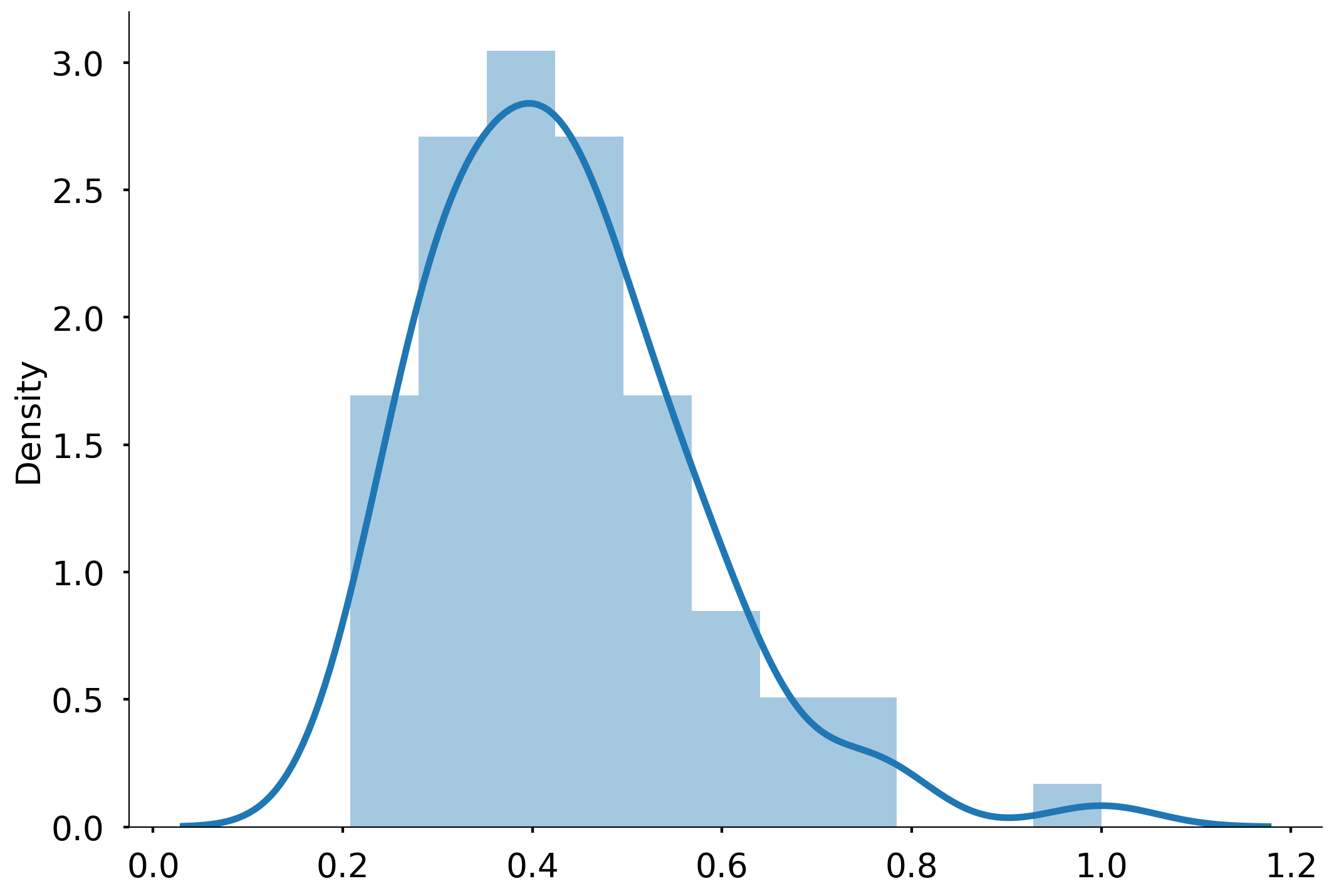

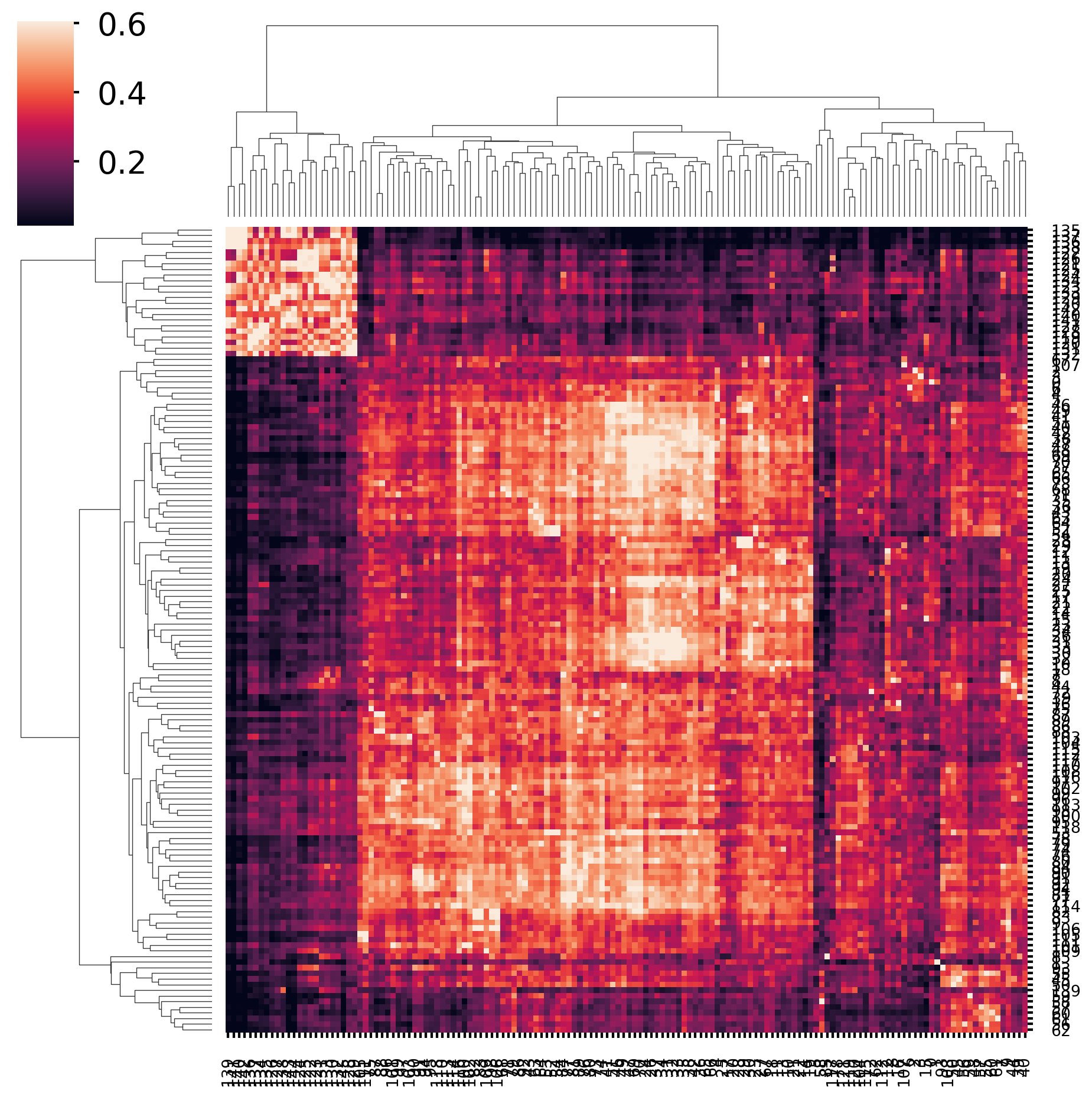

2023-05-13 08:10:00,407 - BERTopic - Reduced number of topics from 88 to 30
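The last log line reports that the topic count was cut from 88 to 30 after clustering. A minimal sketch of one simple way such a reduction can work is below. It repeatedly merges the smallest surviving topic into the topic whose vector is most cosine-similar until `nr_topics` remain. This is an illustration under stated assumptions, not necessarily the procedure this BERTopic version uses internally. The function name `reduce_topics`, the random topic vectors, and the size-weighted averaging of merged vectors are all hypothetical. A real implementation would more likely recompute topic representations from the merged documents.

```python
import numpy as np


def reduce_topics(topic_vectors: np.ndarray, sizes: list[int], nr_topics: int) -> dict[int, int]:
    """Hypothetical sketch: greedily merge topics until nr_topics remain.

    topic_vectors: (n_topics, n_terms) array, e.g. c-TF-IDF-like rows (assumed input).
    sizes: number of documents in each topic.
    Returns a mapping from every original topic id to its surviving topic id.
    """
    vectors = topic_vectors.astype(float).copy()
    sizes = list(sizes)
    alive = set(range(len(sizes)))
    parent = list(range(len(sizes)))  # topic id -> topic it was merged into

    while len(alive) > nr_topics:
        # Pick the smallest surviving topic as the one to merge away.
        smallest = min(alive, key=lambda t: sizes[t])
        others = [t for t in alive if t != smallest]

        # Cosine similarity between the smallest topic and every other survivor.
        a = vectors[smallest]
        b = vectors[others]
        sims = b @ a / (np.linalg.norm(b, axis=1) * np.linalg.norm(a) + 1e-12)
        target = others[int(np.argmax(sims))]

        # Merge (assumption): size-weighted average of the two topic vectors.
        total = sizes[target] + sizes[smallest]
        vectors[target] = (
            vectors[target] * sizes[target] + vectors[smallest] * sizes[smallest]
        ) / total
        sizes[target] = total
        parent[smallest] = target
        alive.remove(smallest)

    def find(t: int) -> int:
        # Follow merge links until reaching a topic that was never merged away.
        while parent[t] != t:
            t = parent[t]
        return t

    return {t: find(t) for t in range(len(parent))}


# Usage with random stand-in data: 88 topics reduced to 30, as in the log above.
rng = np.random.default_rng(0)
demo_vectors = rng.random((88, 50))
demo_sizes = rng.integers(10, 200, size=88).tolist()
mapping = reduce_topics(demo_vectors, demo_sizes, nr_topics=30)
print(len(set(mapping.values())))  # prints 30
```

Merging the smallest topic first keeps large, well-supported topics stable while absorbing fragments into their nearest neighbours. The trade-off is that the outcome depends on the order of merges, which a single global clustering of topic vectors would avoid.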